Survey Aims and Design

In fall 2023, we surveyed general research ethics board (GREB) chairs and ethics office managers/supervisors at Canadian public universities. The survey was distributed in English to English-language universities and in French to French-language universities. We sent it to 74 universities and received 40 responses (a 54% response rate).

The purpose was to investigate how GREBs view research projects that include web scraping, AI, and LLMs. Specifically, we were interested in how often ethics applications are submitted for such projects. We were also interested in respondents' views on Bill C-27, including whether the changes in this legislation might affect how research projects are conducted and/or reviewed for ethics requirements. Finally, we wanted to know what respondents felt was needed to ensure that web scraping, AI, and LLM research projects submit ethics applications when required, and what types of support GREBs need (whether internally from their institutions or externally from the Tri-Council) to better evaluate ethics applications for such projects.

Result 1: AI Researchers May Under-use Ethics Approval

Eight respondents (or 20%) indicated that they did not know how much AI research makes its way through the ethics approval process. Among the remaining respondents, 15 (or 38%) suspected that less than 20% of AI research gets ethics approval; 7 (or 18%) estimated 21-40%; 4 (or 10%) estimated 41-60%; 5 (or 13%) estimated 61-80%; and only one (or 3%) estimated that 81-100% of AI research goes through the ethics approval process.

Respondents were then asked to rank the reasons they believe AI research does not go through ethics review. The following were the top four reasons, in rank order:

- Researchers are unaware of ethics requirements.

- Ethics approval is not required.

- Guidelines for ethics approval are unclear for AI research.

- Approval process is too slow and/or difficult.

Result 2: Lack of Guidance on AI Research Ethics

Respondents were then asked to speculate on what would need to change to ensure that more AI research goes through the ethics approval process. The following are the changes selected by 30% or more of respondents, ranked by popularity:

- Clearer guidelines around AI research in the TCPS2. (34 or 85%)

- Clearer understanding by researchers around the data used in AI research. (24 or 60%)

- Increased understanding of ethics requirements by researchers. (23 or 58%)

- Clearer understanding by ethics boards around the data used in AI research. (22 or 55%)

- Increased understanding of ethics requirements by university admin and/or research office. (15 or 38%)

- Clearer guidelines around AI research ethics in federal and provincial legislation. (14 or 35%)

AI researchers likely already know a great deal about the data used in AI tools and AI research, but they may not know whether using that data requires ethics approval. Much of the research conducted in computer science and engineering does not require ethics approval because it does not involve human participants or their data. Researchers in these areas are therefore less familiar with the ethics approval process and the TCPS2 in the first place. The change most often selected by respondents was clearer guidelines around AI research in the TCPS2. Without such guidelines, it is difficult for GREBs or researchers to know whether a specific AI research project needs ethics approval, because the current version of the TCPS2 does not clearly discuss the type of data used or the way in which it is used.

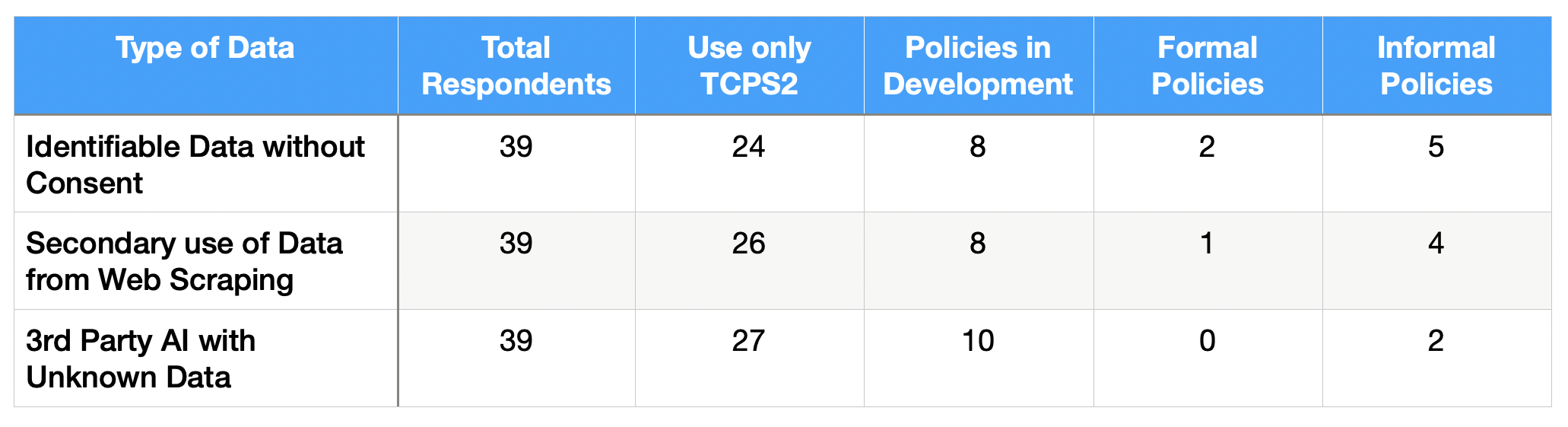

Respondents were asked whether their GREB or Research Ethics Office had any policies, procedures or guidelines in place or in development about the kinds of data used in AI research. About two-thirds of all respondents are only using the TCPS2, as currently written, or have not yet considered the impact of the type of data in question. As shown in the table below, around one-quarter of respondents have some sort of policy, procedure, or guideline in development about the data in question, while only a very small number of respondents have either formal or informal policies, procedures, or guidelines already in place.

We also asked a series of questions about how the proposed Bill C-27 will impact the work of GREBs. The majority of respondents to these questions (33 out of 37) had not yet had time to consider how Bill C-27 will impact their work.

Based on these survey results, we developed a series of recommendations for how the Tri-Council and GREBs might improve transparency and procedures around AI research ethics. Please see our list of recommendations.